Almost every econ PhD student knows the following two articles, but one more hyperlink in the vast ocean of the internet cannot harm. These are pieces of advice to young economists from two academics who are probably at rather opposite ends of the spectrum, both in terms of political views and in terms of writing style. Classic reads.

John Cochrane: "Writing Tips for Ph. D. Students"

Paul Krugman: "How I Work."

Wednesday, 26 March 2014

Friday, 21 March 2014

Wouter den Haan on the state of modern macro

This Wednesday, my advisor Wouter den Haan gave a public lecture at the LSE titled "Is Everything You Hear About Macroeconomics True?". It was very stimulating. While I disagreed on some points (on which possibly more later), I was impressed by the earnestness with which he criticised the profession and his own work, and at the same time sharply drew the line at unwarranted criticism often heard from journalists and politicians.

Excerpt:

Often we get accused of not being open to alternative approaches. I think that's somewhat true. I think people are always somewhat defensive of things which are new. Especially things that are going to threaten your human capital. If you put a lot of effort into building up this human capital to be able to work with these mathematical [DSGE] models, you're not that happy if the new guy says: "No, let's do it another way." But I also think that these alternative approaches would get a lot more attention if they used the same language as the dominant paradigm (for example things like "efficient markets") and if they also understood better what the challenges are.

In particular, the challenges are not so much to generate a crash - our models can do that, too - or to generate volatile asset prices, or to have models that are better than these prototypes [RBC and New-Keynesian models]. The hard part is, if you discipline yourself by choosing parameters, to then get interesting action. There is a bunch of PhD students here, and they know that the hardest part is not to have an idea. The hardest part is to have an idea that is actually going to be quantitatively important if you discipline yourself in choosing things like preferences, technology and market structure.

You can listen to the whole thing here and also download the slides.

Thursday, 20 March 2014

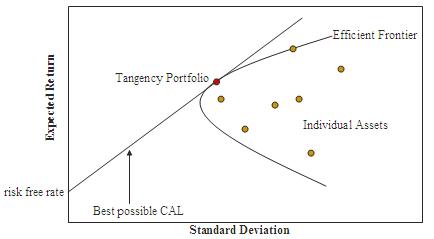

Fallacy of the week: higher risk implies higher return

I remember a conversation at my local bank branch when I wanted to open a small securities account. Their financial advisor asked me what assets I wanted to invest in and offered advice in allocating the portfolio. Most of what she said sounded extremely sensible, but that was mainly because she was a good saleswoman and I hadn't prepared myself well for the meeting.

One part of her advice was that I could choose to invest in equity mutual funds or individual stocks. While the latter would be much more risky, it would also offer the chance of a much higher return.

Over the years, I have heard this argument time and again. In its general form, it goes somewhat like this:

"Here's an investment opportunity. It's quite risky, but as you know, people who only accept low risk only get low returns. So if you want a high return, invest now!"

The argument sounds somewhat intuitive to me. After all, it's just a description of risk premia, right? Markets reward high risk strategies with high returns. But just as this argument is intuitive, it is also dangerously wrong. Why? Because if you believe it, you will put your hard-earned savings at unnecessary risk by not sufficiently diversifying your portfolio.

This post does not contain any deep thoughts about economics. But I write it because my personal observation suggests that many small individual investors run more or less unconsciously into this simple fallacy.

To illustrate it a little more, suppose you are indeed a small individual investor (if you bother to read this far then you almost certainly are). You can invest money into two stocks, Coca-Cola and Pepsi. You expect both to yield an average return of 4% over the next year. But being stocks, they're both risky: You think that 90% of the time, the return of each stock will fluctuate between 0% and 8%. Now, you could invest all your money in either Coke or Pepsi. Or you can invest half the money in each. If you do the latter, and as long as the two stocks don't move in perfect lockstep, your return will be within 0% and 8% more than 90% of the time, because in order for the return to fall below 0% you need both companies to do badly instead of just one. So this reduces your risk. And what's your expected return? It's the average of 4% and 4%, in other words... still 4%!
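For those who prefer numbers, here is a minimal Python sketch of the Coke and Pepsi example. It rests on assumptions that are mine and not part of the story above: returns are normally distributed, and the correlation `rho` between the two stocks is a made-up parameter. The function names are purely illustrative.

```python
from math import sqrt
from statistics import NormalDist

MEAN = 0.04             # expected return of each stock (from the example)
LOW, HIGH = 0.00, 0.08  # band that contains a single stock's return 90% of the time

# Assumption: normal returns. A symmetric 90% band then spans
# +/- z90 standard deviations around the mean.
z90 = NormalDist().inv_cdf(0.95)
SIGMA = (HIGH - MEAN) / z90  # implied volatility of a single stock


def expected_return(weight_coke):
    """Expected portfolio return: a weighted average of 4% and 4%."""
    return weight_coke * MEAN + (1.0 - weight_coke) * MEAN


def prob_in_band(rho):
    """Probability that a 50/50 portfolio returns between LOW and HIGH.

    rho is the (hypothetical) correlation between the two stocks.
    """
    sigma_p = SIGMA * sqrt((1.0 + rho) / 2.0)  # volatility of the 50/50 mix
    if sigma_p == 0.0:
        return 1.0  # perfectly offsetting stocks: the return is always 4%
    portfolio = NormalDist(MEAN, sigma_p)
    return portfolio.cdf(HIGH) - portfolio.cdf(LOW)


for rho in (1.0, 0.5, 0.0):
    print(f"rho={rho:.1f}: P(0% <= return <= 8%) = {prob_in_band(rho):.3f}, "
          f"expected return = {expected_return(0.5):.2%}")
```

With `rho = 1.0` the two stocks are effectively the same asset and the probability stays at 90%. For any lower correlation the probability rises, while the expected return stays at 4% whatever the weights.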

You can go ahead and go stockpicking here, and expose yourself to higher risk. Will it earn you higher returns? Sometimes, but on average: not at all. So sober up and stop gambling with your savings.

And what I say does not only apply to stockpicking. It also applies to pouring a lot of money into the purchase of real estate, or putting it all into an equity stake of that tech/green/tax-evading start-up company. Yes, this is very risky because if that one asset implodes your money is gone. But at the same time, you will often also not get a higher return for taking that risk.

Here's a slightly more technical version of the argument. Asset pricing theory tells us that it is not variance which markets compensate with high returns, but covariance with the discount factor: $$ E[R_i]=R_0 (1 - Cov(Q,R_i))$$ where \(Q\) is the stochastic discount factor and \(R_0\) is the (hypothetical, if you wish) risk-free rate. In the special case of quadratic utility of an investor with a fixed sum to invest over one period, we get the CAPM formula $$ E[R_i]-R_0=\beta_i (E[R^m]-R_0) \text{ with }\beta_i=\frac{Cov(R^m,R_i)}{Var(R^m)}$$ where \( R^m\) is the return on the "market portfolio", the asset allocation of the marginal investor. Now let's rewrite the excess return on security i as \( R_i - R_0 = ( R_i - R_0 - \beta_i (R^m - R_0)) + \beta_i (R^m - R_0) = R_i^d + R_i^{nd}\). By the CAPM formula we have $$ E[R^d_i]=0 \text{ and } E[R^{nd}_i]=E[R_i]-R_0$$ So the market compensates only the risk of the non-diversifiable component \(R^{nd}_i\), but not that of the diversifiable component \(R^d_i\). Since \(R^d_i\) is uncorrelated with \(R^m\) by construction, \(Var[R_i]=Var[R^d_i]+Var[R^{nd}_i]\), and whenever \(Var[R^d_i]>0\) we have $$\beta_i^2=\frac{Var[R^{nd}_i]}{Var[R^m]} \neq \frac{Var[R_i]}{Var[R^m]}$$ The fallacy is to put an "=" sign in the last line. Do you think nobody in his right mind would make that mistake? Well, the German Wikipedia does, stating that \(\beta_i \) is the volatility of an asset relative to the market... I would bet that a lot of people read this or similar expositions and then go on picking their favourite stocks instead of just buying the bloody ETF.
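To see that beta and relative volatility are different things, here is a small simulation sketch. Everything in it is an assumption for illustration: the return distributions, the numbers, and the helper names are invented, and the two assets are built so that the CAPM formula holds for them by construction. Both have a beta of 1, but one carries far more idiosyncratic noise than the other.

```python
import random
from math import sqrt
from statistics import fmean, pvariance

random.seed(0)
N = 50_000
R0 = 0.01  # hypothetical risk-free rate

# Hypothetical market returns: mean 5%, volatility 15%
market = [random.gauss(0.05, 0.15) for _ in range(N)]


def simulate_asset(beta, idio_sd):
    """Asset return = R0 + beta * market excess return + zero-mean noise."""
    return [R0 + beta * (rm - R0) + random.gauss(0.0, idio_sd) for rm in market]


def covariance(x, y):
    """Population covariance of two equally long samples."""
    mx, my = fmean(x), fmean(y)
    return fmean([(a - mx) * (b - my) for a, b in zip(x, y)])


def beta_of(asset):
    """CAPM beta: Cov(R^m, R_i) / Var(R^m)."""
    return covariance(asset, market) / pvariance(market)


def relative_volatility(asset):
    """What the fallacy mistakes for beta: sd(R_i) / sd(R^m)."""
    return sqrt(pvariance(asset) / pvariance(market))


calm = simulate_asset(beta=1.0, idio_sd=0.05)  # little diversifiable risk
wild = simulate_asset(beta=1.0, idio_sd=0.40)  # lots of diversifiable risk

for name, asset in (("calm", calm), ("wild", wild)):
    print(f"{name}: mean return = {fmean(asset):.3f}, beta = {beta_of(asset):.2f}, "
          f"relative volatility = {relative_volatility(asset):.2f}")
```

The two simulated assets have roughly the same average return and the same beta, yet the noisy one is several times as volatile as the market. The extra volatility is diversifiable and earns nothing.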

Now you might say: "Wait, I still want to buy Google instead of Apple stock because I know that that company is better. I got business sense!" Except this is not about business sense. This is not about knowing which company has a good business model and which doesn't. This is about figuring out which securities are under- or overvalued relative to where they will be in the future. Can you analyse this while working a full-time non-finance job and taking care of the kids on the weekends?

There is one potent argument against diversification of course: costs. Actively managed mutual funds take hefty management fees, so with that strategy you might really need to trade off significantly lower returns in exchange for lower risk. With the rise of ETFs and online brokerage accounts, however, it is nowadays very cheap to buy diversified securities or just build your own diversified portfolio. The case is probably less clear for investing in real estate or non-public companies. Real estate and private equity funds for small individual investors do exist, but here the fees are still pretty high. Still, just because it is costly for you to diversify a risky investment strategy doesn't mean that it is also costly for more sophisticated investors. And if these guys are pricing your strategy, then you will still not earn higher returns by taking higher risk.

Saturday, 8 March 2014

Every time you do optimal policy, Hayek sends you a greeting card

Whether you work as an economist, a management consultant, a computer scientist, an engineer, or indeed in any other profession in which you have to build some sort of mathematical model and solve some kind of optimality problem, you often run into a very basic tradeoff. If you make a model that captures your object of study very realistically and in detail, you have a hard time figuring out how to optimise it. And if you make a model that you know how to optimise, it is often oversimplified in some important aspects. So if you're an economist, you will have little hope of finding optimal policies in large-scale models of the macroeconomy, while nobody will believe the small-scale models you build to think about optimal policy. If you're a management consultant, you can fit some nonlinear model to explain your customer data very well, but good luck communicating a nonlinear business strategy to the CEO of your client. If you're a computer scientist, you know the tradeoff as the curse of dimensionality. If you are an engineer, you know that versatility and precision of a machine usually run in opposite directions.

Essentially, we're facing a tradeoff between calibration and optimisation: models that fit the data or process we're looking at very well are hard to optimise well, and models that are easy to optimise usually don't fit the data well.

While this tradeoff exists almost anywhere, and is really just a consequence of the complexity of the world relative to the cognitive capacity of the human mind, I think it carries a particular meaning in economics.

We know that under the standard assumptions we make (which are satisfied for example by the RBC model), the first welfare theorem holds. That's great, because the question of what the government should do is clear: nothing. The market allocates all resources efficiently. Okay, but that all markets are efficient is such an old joke that you can't even impress your grandmother with it. The world abounds with physical or pecuniary externalities, asymmetric information, bounded rationality or contract enforceability problems, and economics has demonstrated their existence both theoretically and empirically.

In principle, such market failures open the possibility of corrective government intervention. As economists, we often ask: What would an optimal policy intervention look like? As long as policy is not able to restore market efficiency completely (attaining the "first-best", as we say), this becomes quite a difficult problem. The computational complexity of solving a Ramsey problem is much higher than that of calculating a competitive equilibrium.

The reason that is so, at least in macroeconomics, is Hayek's insight that the market is successful because it distributes information in a decentralised way. In the market, no individual needs to know all the circumstances of some change which led to resources being allocated differently, as long as they see the prices in the market. I don't need to know that the implementation of some useful technological discovery requires extensive use of tin in order to give up some consumption of it to make room for that discovery; all I need to know is that the price of tin has gone up. By contrast, a central economic planner would need to aggregate all the dispersed information in the economy in order to know how resources should be allocated optimally. Collecting this dispersed information is very problematic, especially when it is about characteristics such as preferences, which are almost impossible to measure.

When we solve a competitive market equilibrium, we take the same position: we only need to solve optimisation problems for agents taking prices as given. Then we can solve for prices using resource constraints in a final step. By contrast, the Ramsey central planner takes into account the impact of policy on prices, and it is this which makes the Ramsey problem difficult. Now, of course solving for prices is also not always easy, especially with heterogeneous agents such as in Krusell and Smith. But try to solve a Ramsey problem in the Krusell-Smith model!
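The two-step recipe for a competitive equilibrium can be sketched in a toy example. This is only an illustration under assumptions of my own choosing: a two-good exchange economy, two agents with Cobb-Douglas preferences, and invented endowments and function names. It covers the price-taking side only; no Ramsey problem is solved here.

```python
# Hypothetical agents: (expenditure share on good x, endowment of x, endowment of y)
AGENTS = [
    (0.3, 1.0, 0.0),
    (0.6, 0.0, 1.0),
]


def demand_x(share, endow_x, endow_y, price_x):
    """Step 1: an agent's optimal demand for x, taking the price as given.

    With Cobb-Douglas utility the agent spends a fixed share of wealth on x.
    Good y is the numeraire, so its price is 1.
    """
    wealth = price_x * endow_x + endow_y
    return share * wealth / price_x


def excess_demand_x(price_x):
    """Total demand for x minus total endowment of x."""
    demand = sum(demand_x(a, ex, ey, price_x) for a, ex, ey in AGENTS)
    supply = sum(ex for _, ex, _ in AGENTS)
    return demand - supply


def clearing_price(lo=1e-6, hi=1e6, iterations=200):
    """Step 2: find the price that satisfies the resource constraint.

    Plain bisection; it works here because excess demand falls as the price rises.
    """
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if excess_demand_x(mid) > 0.0:
            lo = mid  # too much demand: the price must rise
        else:
            hi = mid  # too little demand: the price must fall
    return 0.5 * (lo + hi)


p_star = clearing_price()
print(f"market-clearing price of x: {p_star:.6f}")
print(f"excess demand at that price: {excess_demand_x(p_star):.2e}")
```

Each agent's problem only ever sees a price, never the other agent's preferences or endowment. A planner choosing policy in this economy would instead have to work out how every choice feeds back into `clearing_price`, which is where the extra difficulty comes from.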

And again, while we can calculate optimal policies elegantly in some models, those particular models are often so simplistic that, at least by my impression, people are more often than not very sceptical about the conclusions.

So every time you despair about a Ramsey or other optimal policy problem in economics, and you find yourself faced with a tradeoff of building a simple model nobody believes or not being able to calculate the optimal policy, Hayek is sending you a greeting card from the past: "Remember that the information contained in prices is difficult to centralise".

Tuesday, 11 February 2014

Cyberloafing is the new procrastination

Lucy Kellaway is presenting some apps today to help you reduce your online procrastination, or as it is now called, cyberloafing. She quotes an analogy by David Ryan Polgar that compares surfing the web with eating junk food:

"He says we are getting mentally obese: we binge on junk information, with the result that our brains become so sluggish they are good for nothing except more bingeing."

So stop reading this blog, and let's get back to work.

Saturday, 8 February 2014

Take stupidity into schools

I am writing this post while reading Daniel Kahneman's "Thinking, Fast and Slow", a summary of research on behavioural choice. It's a great book! And reading it actually makes me angry about the education system. The findings it presents, especially on cognitive biases, are of enormous importance in everybody's lives, and yet I do not recall anybody ever telling me about them. I feel this is a major deficiency.

If you haven't read the book, and if you don't have a clear idea about expressions like availability heuristic, survivorship bias, decoy effect, and framing effect, then you are not alone. Luckily though, the ideas are easy to grasp and familiar to most people. For example, the framing effect is simply the tendency of people to see identical information more or less favourably depending on the context in which it is delivered. People are a lot more likely to endorse a certain medication when told "it will have the desired effect 60% of the time" than when told "it will not have the desired effect 40% of the time". Stupid, right? But it is a well-documented empirical regularity in human behaviour.

Now even if you didn't know the term "framing effect", you would maybe say that you know this already. Of course people get tricked all the time. But what Kahneman repeatedly notes in his book is that, although we may know about these biases, we don't think they apply to us personally. Surely, if we were presented with the 60%-40% example above, we think we wouldn't make this easy mistake. And yet we will. Over and over again, even when presented with evidence to the contrary. In fact, in many of the studies Kahneman documents, test subjects who were shown recordings of themselves making clearly biased judgements refused to believe that the recordings were genuine!

The important point to realise, I think, is that these cognitive biases are not just curiosities that make for good cocktail party conversation. They constantly lead us to make decisions that we will regret, and for society as a whole, cause huge inefficiencies. Their effect is pervasive. Whether we are aware of them and how we deal with them matters: Each time we buy a suit. Each time we buy an insurance policy. And each time we sell a suit or an insurance policy, manage our finances, or go to a job interview. In each case, the more biases are at play, the more likely the outcome is inefficient for society, since we end up buying things we don't need, selling things other people don't need, not getting the job we are made for, hiring someone who isn't made for the job etc.

This is not even to mention the impact of cognitive biases on election outcomes in democracies. History abounds with examples of voters electing demagogues, and every politician knows: to win votes is to exploit voters' cognitive biases. This vulnerability of the democratic system is inherent in its construction. Ultimately, the faith in democracy stems from the belief that every citizen is rational enough to be able to decide on his or her government. Seeing the citizen in this way was a major achievement of the Enlightenment. But in some circumstances it might be too idealistic.

Fortunately, something can be done about this deficiency. We need to anchor the teaching of "practical psychology" in the school curriculum to ensure every citizen is equipped with the knowledge of his or her stupidity. By "practical psychology", I mean those insights from psychology which concern our every day lives, including the study of cognitive biases, but also best practices on how to make decisions, memorise things or rid oneself of bad habits. More remote fields such as dream interpretation, pathologies or psychotherapy would be excluded.

Is this a sensible proposal? I think it is, but there are at least four main ways to attack it.

Attack 1: Cognitive biases don't really matter. People frequently make mistakes, but they err on both sides. Sometimes they will be led to buy a bit too much and sometimes a bit too little. On average, that just washes out.

Defense: Economists especially have a deep belief in this kind of argument. Just about any economic model is built on the ideal of the rational agent with infinite information processing capacity, and the evidence presented by Kahneman and others can only be reconciled with this ideal if deviations from it are subject to some law of large numbers. But it is far from clear that these deviations really have zero mean. Sellers are usually better at manipulating buyers than the other way around. The mere existence of consumer protection testifies to this. Also, some decisions are made once in a lifetime, like buying a house. The mistake you make on that one occasion is very relevant to you as an individual, even if across individuals the mistakes average out.

Attack 2: Teaching this stuff in school won't improve people's behaviour. Kahneman himself says that cognitive biases are almost impossible to overcome.

Defense: This is probably true (although apparently there exist various attempts at teaching "debiasing"). Still, this does not mean that knowledge of such "practical psychology" won't improve our society. Even though I am not able to overcome the psychological sales tactics of my banker, I can still insist on calling him back about my investment decision after a good night's sleep, even if in the moment his case appears fully watertight to me.

Attack 3: This would be a good idea, but you can't teach this in school. It is about being streetsmart, and either you get that or you don't.

Defense: This is a very powerful argument. I forgot most of my school curriculum already. So why would pupils remember cognitive biases? But I think this is so close to our lives that it would stick. We could make the teaching very practical, very example-based. Besides, I recall so many debates that we were required to make in various subjects. Had I known about the psychology behind it, the techniques of persuasion, I would have been much more interested in them.

Attack 4: You cannot teach everything in school. Because of the limited time the pupils have, choices need to be made. This is simply not as important as other subjects.

Defense: By that logic, geography doesn't belong in the school curriculum at all.

No, this is a sensible proposal with huge upside. Adolescents in school don't only need to know the facts about the world, they also need to know how not to screw up. The introduction of financial literacy courses in Britain is a step in the right direction. When people get tricked by their bankers, the solution is neither to sob about declining moral values, nor to construct a nanny-state which regulates every aspect of consumer finance, but to empower people to make better decisions (even though the usual suspects disagree).

On a broader scale, our school curriculum is extremely slow-moving, at least in my home country Germany. In many parts it still seems to represent education ideals of the 19th century. Why do they make our children learn poems by heart? Regurgitate the list of Egyptian pharaohs or Presidents of the United States? You can easily find out about this on Wikipedia. What I think is more important in a world where the individual has to take on ever more decisions is to teach people from an early age that everybody around them is stupid, that they themselves are no better, and what they can do about it.
